TensorFlow Developer Notes

DeepLearning.AI TensorFlow Developer Professional Certificate (link to the full program)

Introduction to TensorFlow for Artificial Intelligence, Machine Learning, and Deep Learning

Convolutional Neural Networks in TensorFlow

Natural Language Processing in TensorFlow

Sequences, Time Series and Prediction

Introduction to TensorFlow for Artificial Intelligence, Machine Learning, and Deep Learning

Week 1 A New Programming Paradigm

Before you begin: TensorFlow 2.0 and this course

Introduction: A conversation with Andrew Ng

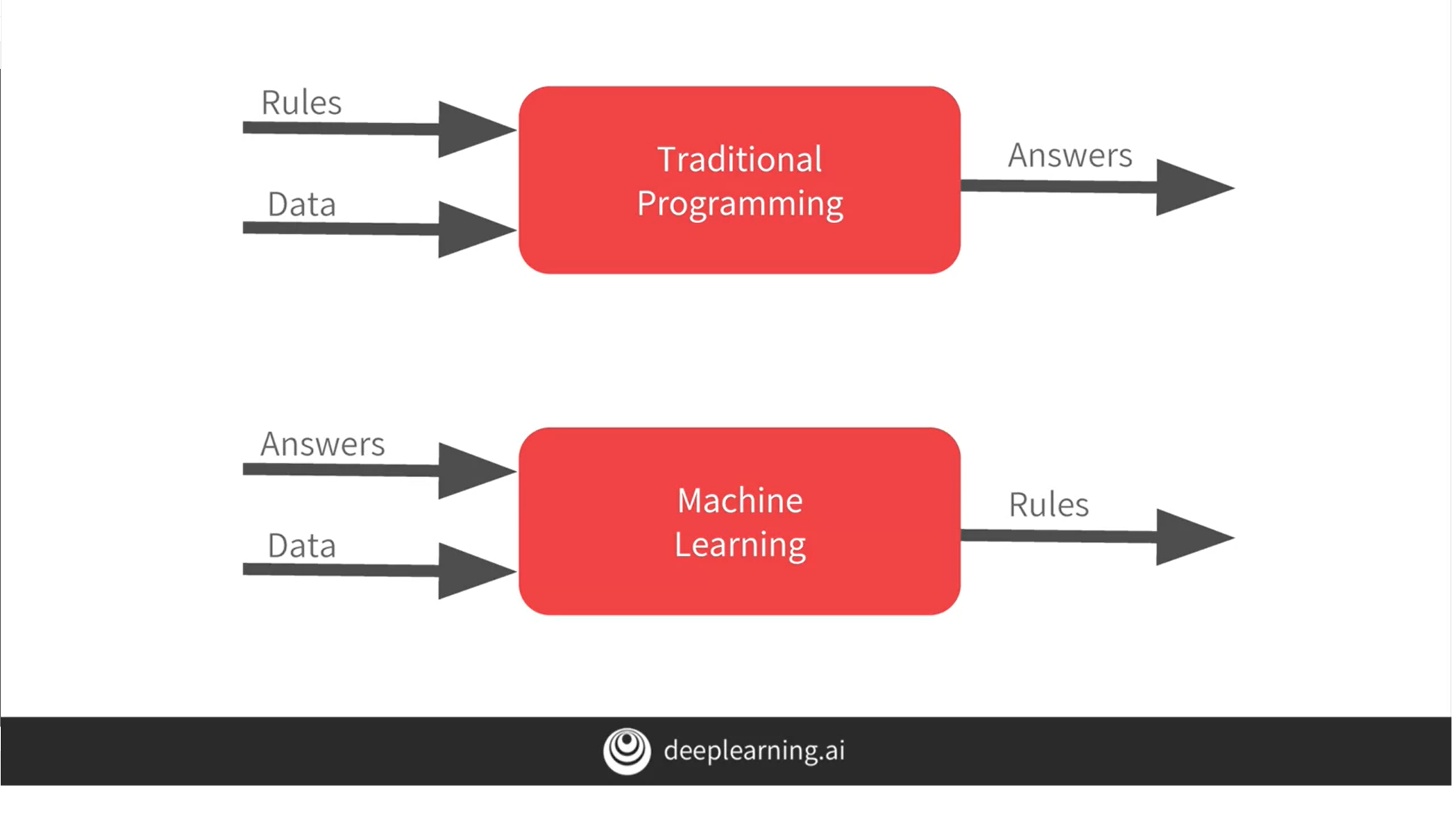

A primer in machine learning

The ‘Hello World’ of neural networks

import tensorflow as tf

From rules to data

Working through ‘Hello World’ in TensorFlow and Python
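As a rough sketch of what the ‘Hello World’ lesson builds: a single-neuron network that learns a linear rule from examples instead of being given the rule. The six data points, the rule y = 2x - 1, the optimizer, and the epoch count are assumptions for illustration and are not recorded in these notes.

```python
import numpy as np
import tensorflow as tf

# Six (x, y) pairs that follow the assumed rule y = 2x - 1.
xs = np.array([-1.0, 0.0, 1.0, 2.0, 3.0, 4.0], dtype="float32").reshape(-1, 1)
ys = 2.0 * xs - 1.0

# One Dense layer with a single unit: the model learns y = w * x + b.
model = tf.keras.Sequential([
    tf.keras.Input(shape=(1,)),
    tf.keras.layers.Dense(units=1),
])
model.compile(optimizer="sgd", loss="mean_squared_error")

# Train quietly on the six points.
model.fit(xs, ys, epochs=500, verbose=0)

# Predict y for x = 10. The answer should be close to 19 but not exactly 19,
# because the model only estimates the rule from a handful of examples.
prediction = float(model.predict(np.array([[10.0]]), verbose=0)[0][0])
print(prediction)
```

The network never sees the formula. It only sees inputs and answers, which is the "from rules to data" shift this week is about.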

Try it for yourself

GitHub Course 1 - Part 2 - Lesson 2 - Notebook.ipynb

Colab Course 1 - Part 2 - Lesson 2 - Notebook.ipynb

Week 1 Quiz (submit after upgrading)

Weekly Exercise - Your First Neural Network

Introduction to Google Colaboratory

Get started with Google Colaboratory (Coding TensorFlow) - YouTube

Exercise 1 (Housing Prices) (submit after upgrading)

Programming Assignment: Exercise 1 (Housing Prices) (submit after upgrading)

Week 1 Resources

Optional: Ungraded Google Colaboratory environment

Exercise 1 (Housing Prices)

Colab Exercise_1_House_Prices_Question.ipynb

Week 2 Introduction to Computer Vision

A Conversation with Andrew Ng

An Introduction to computer vision

Exploring how to use data

Writing code to load training data

The structure of Fashion MNIST data

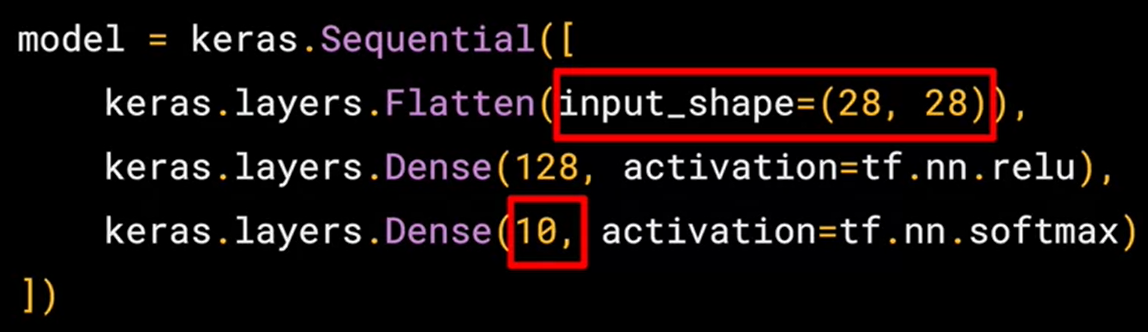

Coding a Computer Vision Neural Network
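A minimal sketch of the kind of classifier this lesson covers, assuming 28x28 grayscale inputs and 10 clothing classes as in Fashion MNIST. To stay self-contained it trains on random stand-in data rather than downloading the real dataset, so the accuracy is meaningless. The layer sizes are assumptions, not values recorded in these notes.

```python
import numpy as np
import tensorflow as tf

# Synthetic stand-in for Fashion MNIST: 28x28 grayscale images, 10 classes.
rng = np.random.default_rng(0)
images = rng.random((64, 28, 28)).astype("float32")  # already scaled to [0, 1)
labels = rng.integers(0, 10, size=64)

model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28)),
    tf.keras.layers.Flatten(),                        # 28x28 grid -> 784 values
    tf.keras.layers.Dense(128, activation="relu"),    # hidden layer
    tf.keras.layers.Dense(10, activation="softmax"),  # one probability per class
])
model.compile(optimizer="adam",
              loss="sparse_categorical_crossentropy",
              metrics=["accuracy"])
model.fit(images, labels, epochs=1, verbose=0)

# Each prediction is a row of 10 class probabilities that sum to 1.
probabilities = model.predict(images[:1], verbose=0)
print(probabilities.shape)
```

With the real dataset, pixel values range from 0 to 255, so dividing by 255.0 before training gives the same [0, 1] scaling used here.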

See how it’s done

Walk through a Notebook for computer vision

Get hands-on with computer vision

Colab Course 1 - Part 4 - Lesson 2 - Notebook.ipynb

import tensorflow as tf

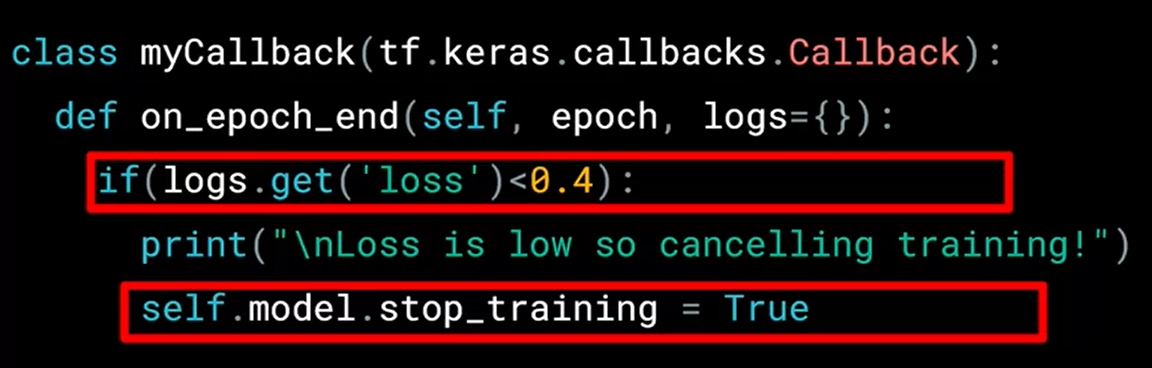

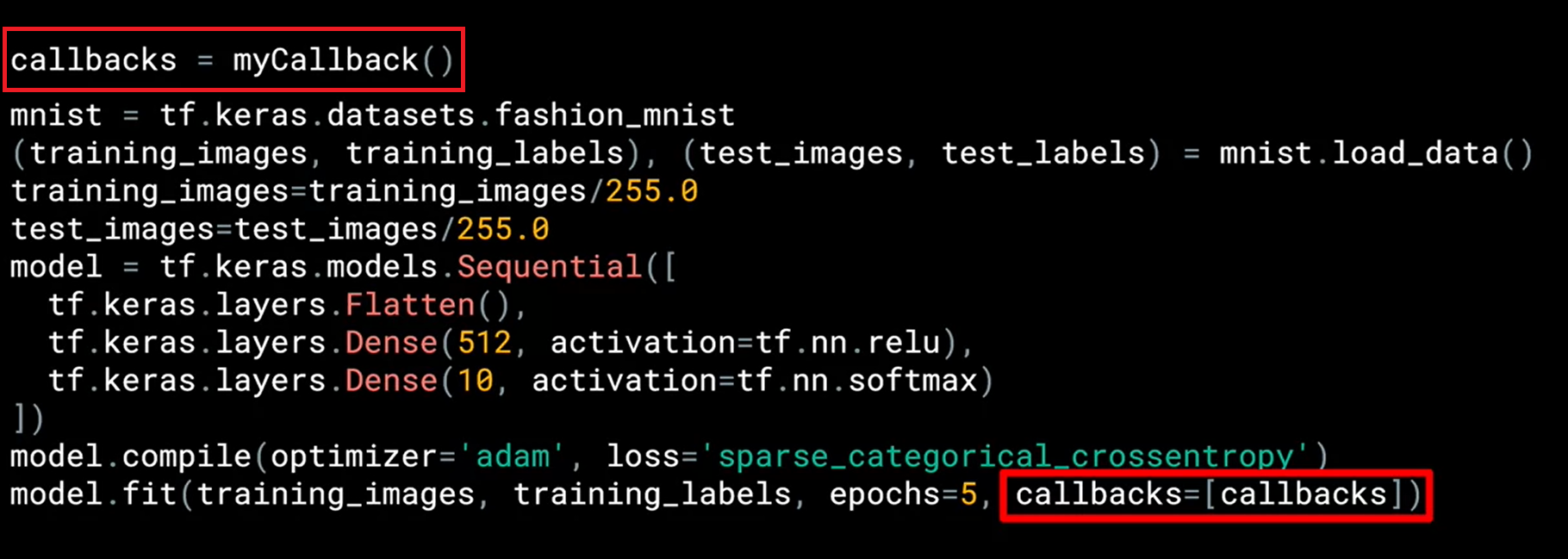

Using Callbacks to control training

See how to implement Callbacks
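One way a callback can control training is sketched below: a custom `tf.keras.callbacks.Callback` checks the loss at the end of each epoch and stops training once it drops below a threshold. The class name `StopAtLoss`, the threshold, and the toy linear dataset are hypothetical choices for illustration. The course notebook applies the same idea to Fashion MNIST.

```python
import numpy as np
import tensorflow as tf

class StopAtLoss(tf.keras.callbacks.Callback):
    """Hypothetical callback: stop once the training loss is low enough."""

    def __init__(self, threshold):
        super().__init__()
        self.threshold = threshold

    def on_epoch_end(self, epoch, logs=None):
        loss = (logs or {}).get("loss")
        if loss is not None and loss < self.threshold:
            # Keras checks this flag after every epoch.
            self.model.stop_training = True

# Toy data following y = 2x - 1 (an assumption, used to avoid a download).
xs = np.array([-1.0, 0.0, 1.0, 2.0, 3.0, 4.0], dtype="float32").reshape(-1, 1)
ys = 2.0 * xs - 1.0

model = tf.keras.Sequential([
    tf.keras.Input(shape=(1,)),
    tf.keras.layers.Dense(units=1),
])
model.compile(optimizer="sgd", loss="mean_squared_error")

# Ask for far more epochs than needed; the callback ends training early.
history = model.fit(xs, ys, epochs=2000, verbose=0,
                    callbacks=[StopAtLoss(threshold=0.01)])
epochs_run = len(history.history["loss"])
print(epochs_run)
```

The design point is that the epoch count becomes an upper bound rather than a guess: training runs only as long as it takes to reach the target.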

Colab Course 1 - Part 4 - Lesson 4 - Notebook.ipynb

import tensorflow as tf

Walk through a notebook with Callbacks

Week 2 Quiz (submit after upgrading)

Exercise 2 (Handwriting Recognition) (purchase a subscription to unlock this item)

Programming Assignment: Exercise 2 (submit after upgrading)

Weekly Exercise - Implement a Deep Neural Network to recognize handwritten digits

Week 2 Resources

Beyond Hello, World - A Computer Vision Example

Exercise 2 - Handwriting Recognition - Answer

Optional: Ungraded Google Colaboratory environment

Exercise 2 (Handwriting Recognition)

Colab Exercise2-Question.ipynb

Week 3 Enhancing Vision with Convolutional Neural Networks

A conversation with Andrew Ng

What are convolutions and pooling?

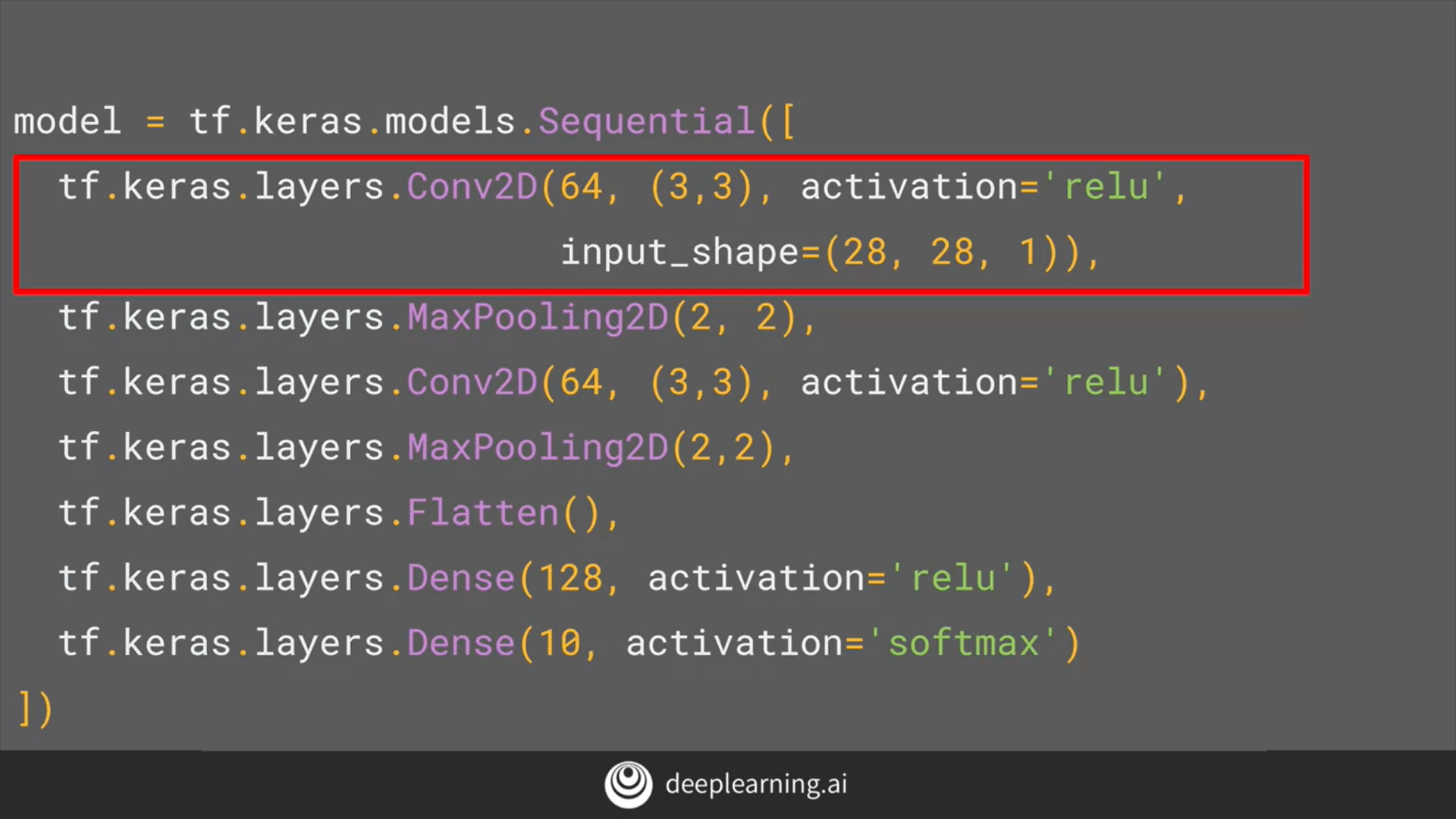

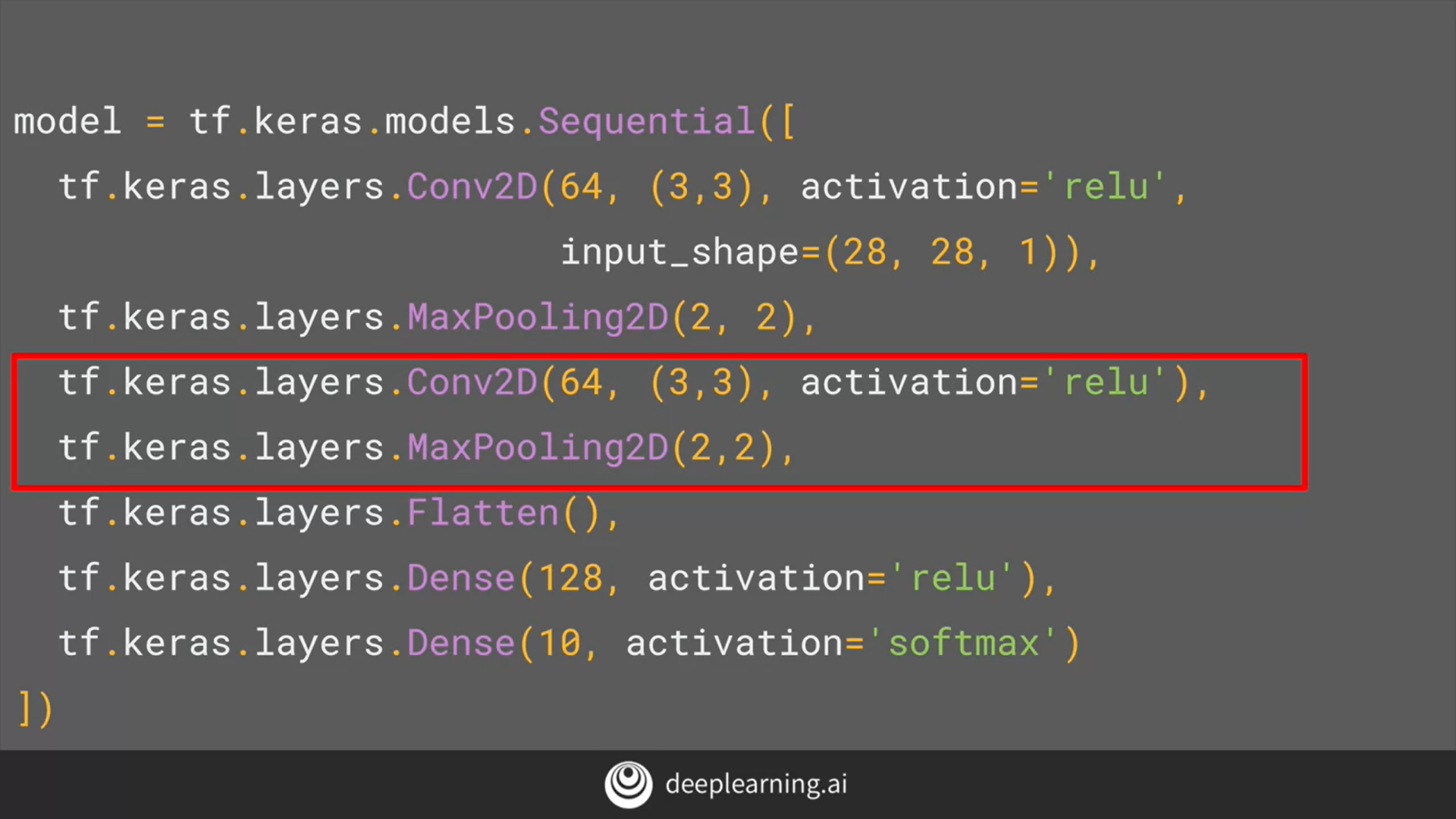

Coding convolutions and pooling layers

tf.keras.layers.Conv2D

tf.keras.layers.MaxPool2D

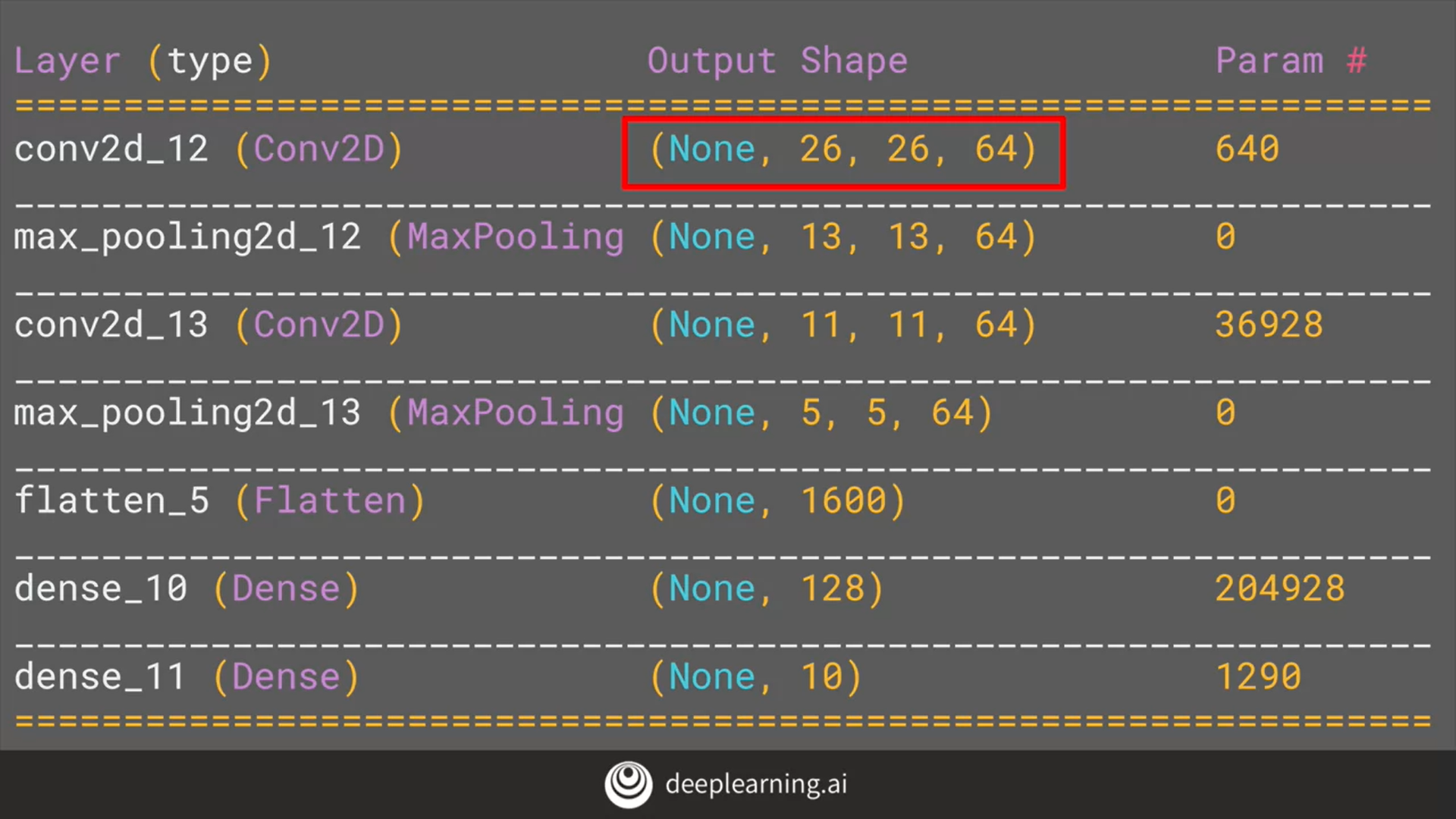

Implementing convolutional layers

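- 64 filters, each 3×3

- How the filters themselves are defined is beyond the scope of this class

- The filters aren’t random. They start with a set of known good filters, in a similar way to the pattern fitting that you saw earlier

- relu means negative values are discarded (set to zero)

- The 1 in the input shape means a single color channel (grayscale)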

- For more details on convolutions and how they work, there’s a great set of resources here.
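The points above can be sketched as a single layer: 64 filters of size 3×3, relu activation, applied to a 28×28 image with one grayscale channel. The blank and random input batches are assumptions used only to inspect shapes and value ranges.

```python
import numpy as np
import tensorflow as tf

# 64 filters, each 3x3, with relu so negative responses become zero.
conv = tf.keras.layers.Conv2D(filters=64, kernel_size=(3, 3), activation="relu")

# One 28x28 image with a single (grayscale) channel: batch, height, width, channels.
blank = tf.zeros((1, 28, 28, 1))
output_shape = tuple(conv(blank).shape)

# The layer's weights: 3x3 window, 1 input channel, 64 output filters.
kernel_shape = tuple(conv.kernel.shape)

# relu guarantees that no output value is negative.
noise = tf.constant(np.random.rand(1, 28, 28, 1).astype("float32"))
min_value = float(tf.reduce_min(conv(noise)))

print(output_shape)  # (1, 26, 26, 64)
print(kernel_shape)  # (3, 3, 1, 64)
```

Each of the 64 filters produces its own 26×26 feature map, which is why the last dimension of the output is 64.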

Learn more about convolutions

Convolutional Neural Networks playlist

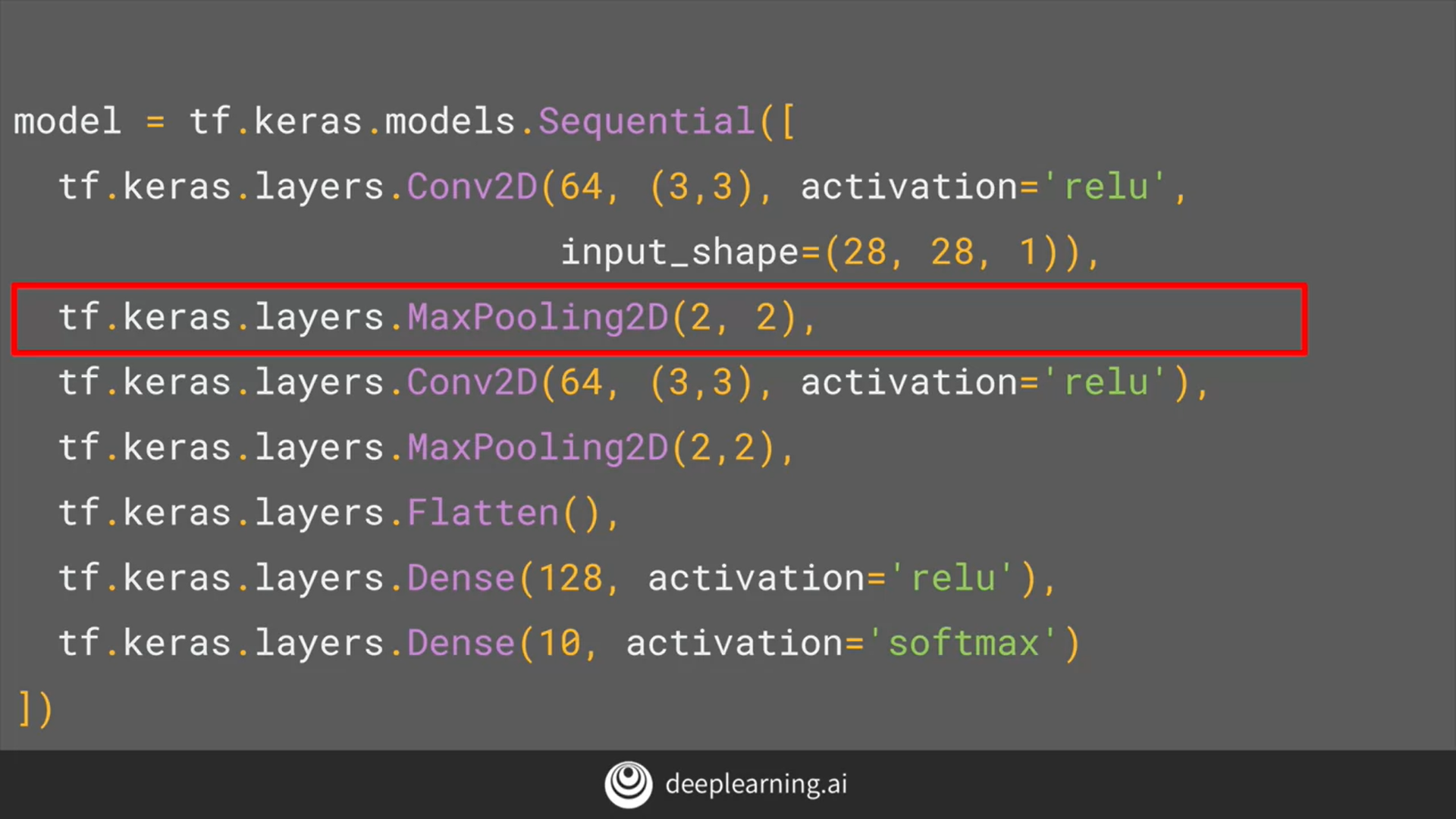

Implementing pooling layers

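28 -> 26: a 3×3 convolution without padding cannot be centered on the border pixels, so a 28×28 image becomes 26×26.

13 -> 11: 2×2 max pooling halves 26×26 to 13×13, and the next 3×3 convolution trims that to 11×11.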
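The size changes can be checked with a few lines of arithmetic. This sketch assumes 3×3 convolutions with no padding and stride 1, followed by 2×2 non-overlapping pooling. The helper names are hypothetical.

```python
def conv_output_size(size, kernel=3):
    """Side length after a convolution with no padding and stride 1."""
    return size - kernel + 1

def pool_output_size(size, pool=2):
    """Side length after non-overlapping pooling (leftover pixels are dropped)."""
    return size // pool

size = 28
trace = [size]
for _ in range(2):  # two convolution + pooling pairs
    size = conv_output_size(size)
    trace.append(size)
    size = pool_output_size(size)
    trace.append(size)

print(trace)  # [28, 26, 13, 11, 5]
```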

Getting hands-on, your first ConvNet

Improving the Fashion classifier with convolutions
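A sketch of how convolution and pooling layers can sit in front of the dense classifier. The filter count (64) and input shape follow the notes above. The dense layer sizes and the number of convolution blocks are assumptions. A blank image is pushed through layer by layer to show how the shape changes.

```python
import tensorflow as tf

model = tf.keras.Sequential([
    tf.keras.Input(shape=(28, 28, 1)),
    tf.keras.layers.Conv2D(64, (3, 3), activation="relu"),
    tf.keras.layers.MaxPooling2D(2, 2),
    tf.keras.layers.Conv2D(64, (3, 3), activation="relu"),
    tf.keras.layers.MaxPooling2D(2, 2),
    tf.keras.layers.Flatten(),
    tf.keras.layers.Dense(128, activation="relu"),
    tf.keras.layers.Dense(10, activation="softmax"),
])

# Push one blank image through the layers and record each output shape.
x = tf.zeros((1, 28, 28, 1))
shapes = []
for layer in model.layers:
    x = layer(x)
    shapes.append(tuple(x.shape))

for layer, shape in zip(model.layers, shapes):
    print(layer.name, shape)
```

By the time the data reaches `Flatten`, each image has been reduced to 5×5×64 = 1600 values, so the dense layers work on extracted features rather than raw pixels.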

Try it for yourself

Colab Course 1 - Part 6 - Lesson 2 - Notebook.ipynb

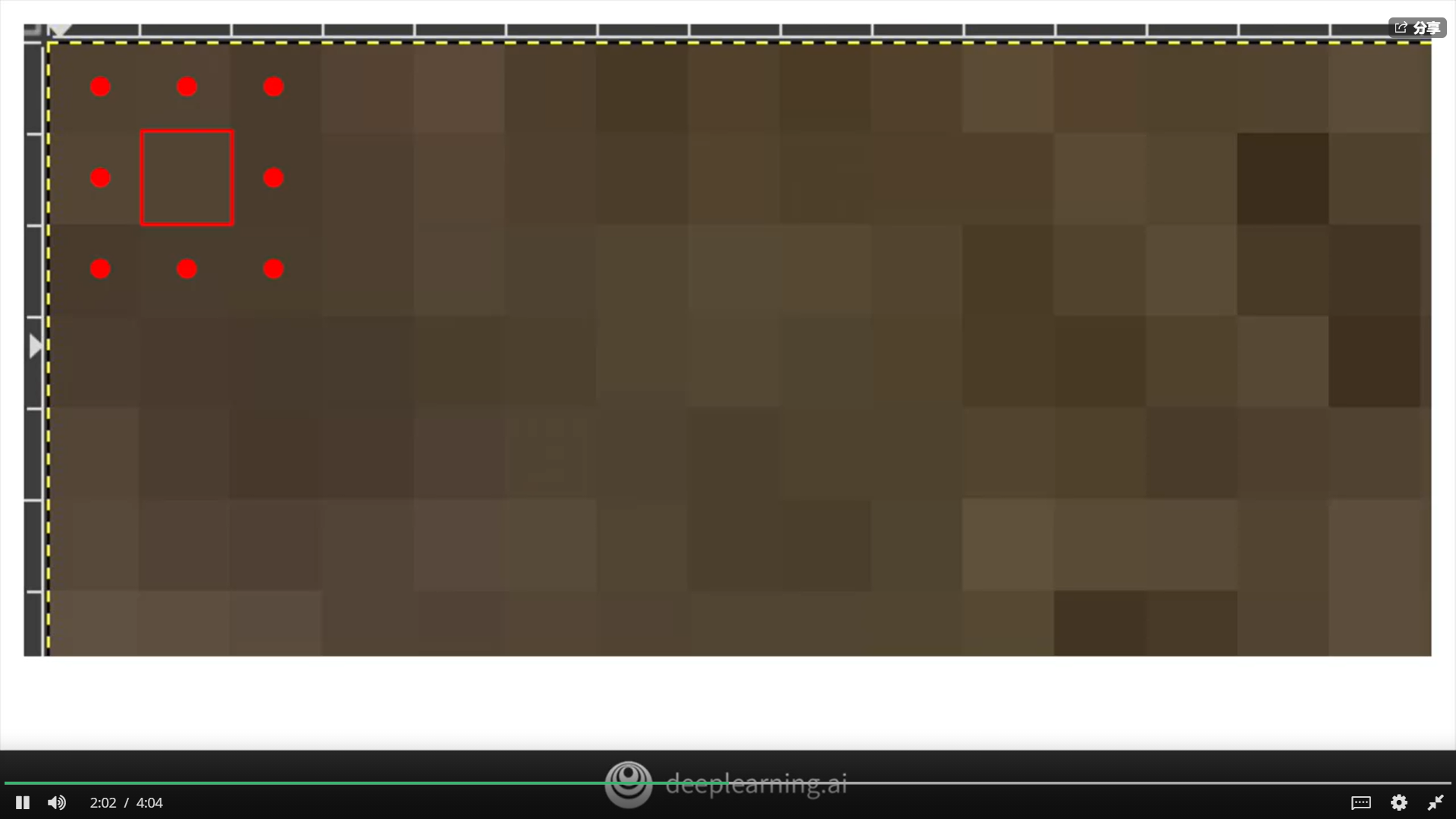

Walking through convolutions

Experiment with filters and pools
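To experiment with filters and pooling by hand, the following NumPy sketch applies a 3×3 filter to a tiny image and then max-pools the result. The 6×6 test image and the Sobel-style vertical-edge filter are assumptions chosen so the output is easy to verify. As in CNN layers, the filter is slid over the image without being flipped.

```python
import numpy as np

def convolve2d_valid(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w), dtype="float32")
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool_2x2(feature_map):
    """Keep the largest value in each non-overlapping 2x2 block."""
    h, w = feature_map.shape
    h, w = h - h % 2, w - w % 2  # drop an odd trailing row or column
    blocks = feature_map[:h, :w].reshape(h // 2, 2, w // 2, 2)
    return blocks.max(axis=(1, 3))

# Image with a vertical edge: left half dark (0), right half bright (1).
image = np.zeros((6, 6), dtype="float32")
image[:, 3:] = 1.0

# Filter that responds to dark-to-bright transitions from left to right.
kernel = np.array([[-1, 0, 1],
                   [-2, 0, 2],
                   [-1, 0, 1]], dtype="float32")

edges = np.maximum(convolve2d_valid(image, kernel), 0.0)  # relu
pooled = max_pool_2x2(edges)

print(edges[0])  # [0. 4. 4. 0.]
print(pooled)    # [[4. 4.] [4. 4.]]
```

The filter responds only where its window straddles the edge, and pooling keeps that strong response while shrinking the map from 4×4 to 2×2.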

Colab Course 1 - Part 6 - Lesson 3 - Notebook.ipynb

Lode’s Computer Graphics Tutorial Image Filtering

Week 3 Quiz (submit after upgrading)

Weekly Exercise - Improving DNN Performance using Convolutions

Exercise 3 (Improve MNIST with convolutions) (purchase a subscription to unlock this item)

Programming Assignment: Exercise 3 (Improve MNIST with convolutions) (submit after upgrading)

Week 3 Resources

Adding Convolutions to Fashion MNIST

Exploring how Convolutions and Pooling work

Optional: Ungraded Google Colaboratory environment

Exercise 3 - Improve MNIST with convolutions

(To be continued)